Controlling the output of generative AI systems is essential for safety, trust, compliance, and meaningful human-AI collaboration.

As businesses, creators, and governments increasingly rely on AI-generated content, it becomes critical to manage accuracy, bias, privacy, and ethical outcomes in real-world scenarios.

Understanding why controlling generative AI systems is important helps organizations use AI responsibly and strategically.

Why Is Controlling The Output Of Generative AI Systems Important?

Generative AI systems have transformed how content, design, software, communications, and intelligence tasks are performed.

Without proper controls, AI output can be misleading, harmful, biased, or legally risky.

That is why understanding and controlling generative AI outputs is crucial: it is central to ethical AI adoption, user safety, and sustainable innovation.

Why Is Controlling The Output Of Generative AI Systems Important For Accuracy And Truthfulness?

One of the most critical reasons why controlling the output of generative AI systems is important is to maintain accuracy, factual integrity, and reliability.

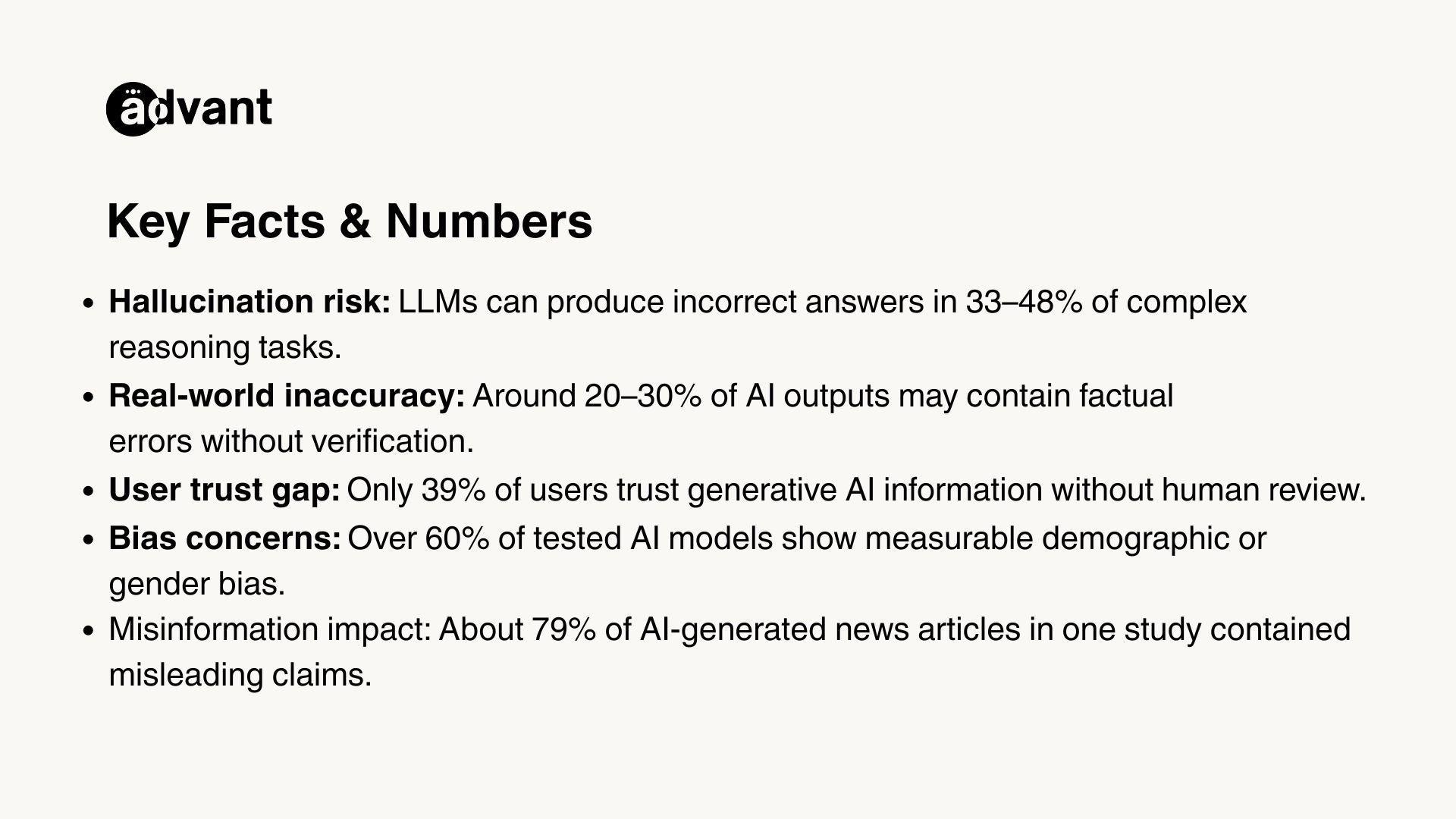

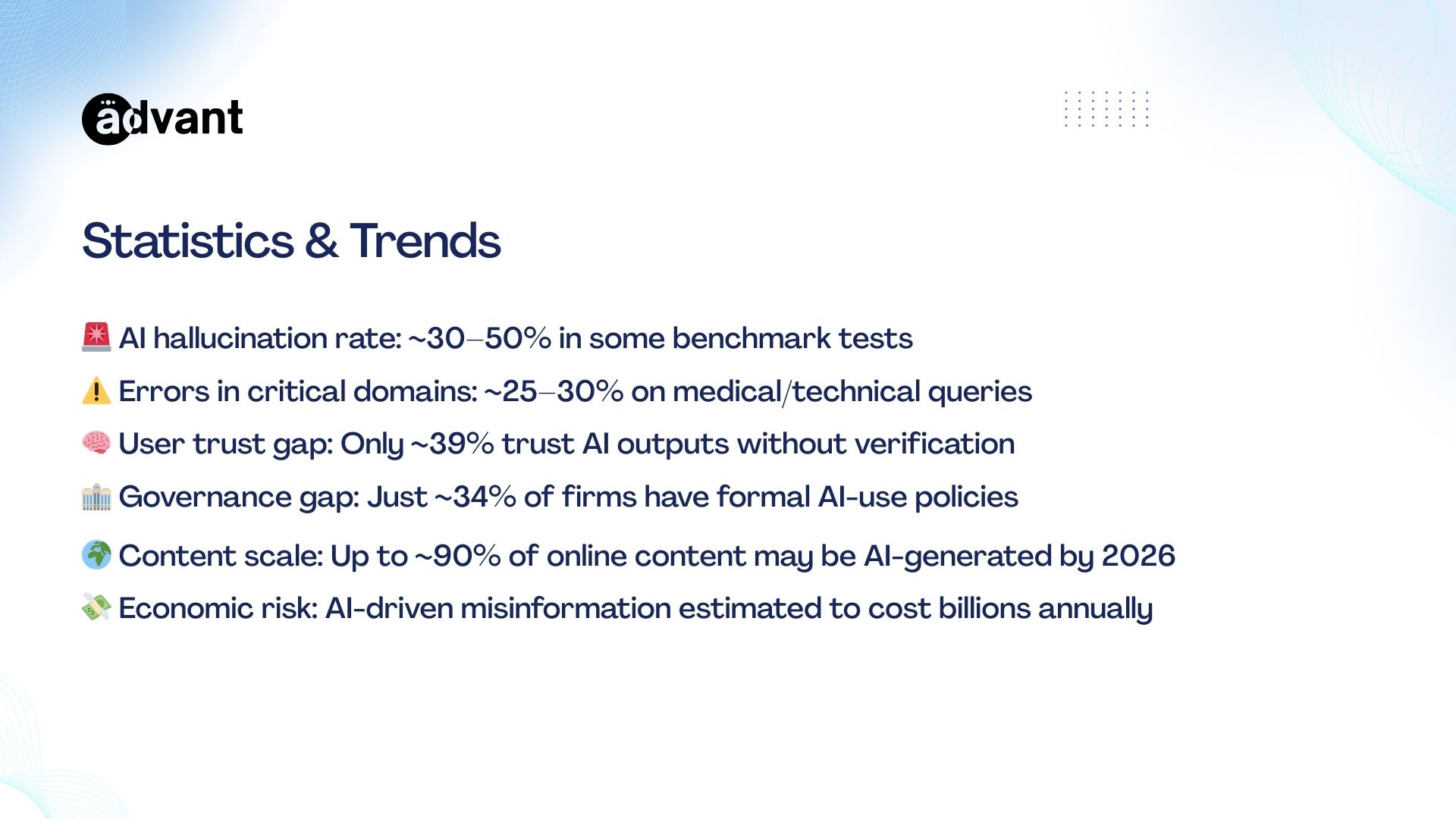

Generative AI models sometimes produce hallucinations, fabricated details, or misleading content. Implementing control mechanisms ensures that AI-generated information remains useful, verifiable, and aligned with real-world truth.

Generative AI can create convincing text, images, and insights, but not all generated outputs are correct.

When AI delivers inaccurate results in domains like healthcare, finance, policy, or research, the consequences can be severe.

That is why organizations prioritize structured oversight.

Key reasons accuracy control is essential include:

Prevents Misleading Information From Being Distributed

Protects Businesses From Reputational Damage

Ensures Users Receive Reliable And Verifiable Outputs

Reduces The Risk Of AI Hallucinating False Facts

Supports Decision-Making With Trusted Data

Maintaining truthfulness is a core answer to why controlling the output of generative AI systems is important in professional environments.

Why Is Controlling The Output Of Generative AI Systems Important For Ethical And Fair Use?

Ethics remains a foundational reason to control the output of generative AI systems. AI systems may unintentionally reinforce stereotypes, produce harmful narratives, or amplify societal bias.

Governance frameworks and human-in-the-loop review help ensure fairness, responsibility, and representation across cultural values, demographics, and contexts.

Generative AI relies on massive datasets to learn patterns, and some of those patterns contain historical bias. Without proper output regulation, AI may reproduce discriminatory results or exclusionary behavior.

Ethical control protects society through measures such as:

Ensuring AI Treats All Demographic Groups Fairly

Reducing Harmful Or Offensive Content Generation

Preventing Bias From Influencing Automated Decisions

Supporting Culturally Sensitive Representations

Aligning AI Systems With Human Values

This reinforces why controlling the output of generative AI systems is important from a social responsibility perspective.
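One way to make "preventing bias from influencing automated decisions" concrete is to compare how often each demographic group receives a favorable outcome. The following is a minimal sketch of that idea, not a complete fairness audit. The function names, the sample records, and the 0.2 threshold are all assumptions made up for illustration.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    True when the AI-assisted decision was favorable.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in favorable-outcome rate between any two groups."""
    values = list(rates.values())
    return max(values) - min(values) if values else 0.0


# Hypothetical sample data, invented purely for illustration.
sample = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = positive_rate_by_group(sample)
gap = max_rate_gap(rates)

# Assumed review threshold; a real value would come from policy, not this sketch.
GAP_THRESHOLD = 0.2
needs_review = gap > GAP_THRESHOLD
print(rates, gap, needs_review)
```

A large gap does not prove discrimination on its own, but it is a simple signal that the outputs deserve a closer human look.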

Why Is Controlling The Output Of Generative AI Systems Important For Security And Risk Prevention?

Security-focused oversight explains another dimension of why controlling the output of generative AI systems is important.

AI models can unintentionally generate harmful instructions, leak sensitive data, or produce content that supports fraud or manipulation.

By controlling output, organizations reduce misuse and make AI systems harder to exploit for malicious purposes.

Generative AI can be misused in high-risk contexts such as phishing, impersonation, cyber-scams, or weapon design. Output restrictions help establish security boundaries.

Security-driven controls help to:

Block Harmful Or Dangerous Instruction Generation

Reduce Fraudulent Or Misleading Identity Outputs

Prevent Exposure Of Sensitive Or Confidential Data

Protect Users From Manipulative Or Deceptive Content

Stop AI From Supporting Illegal Or Unsafe Behavior

Security safeguards strongly support why controlling the output of generative AI systems is important to protect people, platforms, and infrastructure.
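As a rough illustration of preventing exposure of sensitive data, an output can be passed through a redaction step before it reaches the user. The sketch below assumes two deliberately simple regular expressions and a hypothetical `redact_sensitive` helper. Real deployments would likely need much broader detection than this.

```python
import re

# Illustrative patterns only; they are simple on purpose and will miss
# many real-world formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact_sensitive(text):
    """Replace email addresses and card-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    text = CARD_RE.sub("[REDACTED_NUMBER]", text)
    return text


# Hypothetical model output used only to exercise the helper.
draft = "Contact jane.doe@example.com or pay with 4111 1111 1111 1111."
print(redact_sensitive(draft))
```

Pattern-based redaction is cheap and predictable, which makes it a reasonable last line of defense, but it should complement rather than replace controls on what data the model can access in the first place.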

Why Is Controlling The Output Of Generative AI Systems Important For Legal And Regulatory Compliance?

Legal accountability plays a major role in explaining why controlling the output of generative AI systems is important.

Organizations must comply with laws related to copyright, data governance, privacy regulations and emerging AI governance frameworks.

Controlled AI outputs reduce legal exposure and support compliant, responsible deployment.

Global regulations increasingly focus on accountability, transparency, and risk control as AI adoption expands rapidly. Failure to manage these outputs can lead to serious legal disputes and penalties.

Compliance-oriented controls help businesses:

Prevent Copyright Or Intellectual Property Violations

Ensure AI Does Not Reproduce Protected Data

Comply With Data Privacy And Governance Laws

Maintain Documentation For AI Decision Processes

Demonstrate Responsible AI Usage Standards

Why Is Controlling The Output Of Generative AI Systems Important For Brand Trust And Reputation?

Brand credibility is directly related to generative AI output control. Organizations that deploy AI-generated content that is meaningless, low quality, inaccurate, or insensitive can damage their own brand image.

Proper output control ensures the messaging remains consistent with brand voice, values and user expectations.

When users interact with AI tools, they associate the results and the experience with the organization providing them.

Output governance strengthens brand trust by:

Maintaining Consistent Tone And Brand Messaging

Ensuring Professional-Quality Content Output

Preventing Embarrassing Or Harmful Public Errors

Building Long-Term Customer Confidence

Supporting Authentic And Transparent Communication

Why Is Controlling The Output Of Generative AI Systems Important For Human-AI Collaboration?

Human-AI collaboration highlights another perspective on why controlling the output of generative AI systems is important.

Controlled output allows AI to function as an assistant rather than a fully autonomous agent, ensuring that human judgment, creativity, and oversight remain central to the decision-making process.

Generative AI performs most effectively when humans review, refine, and guide its output, not when it operates unchecked.

Controlled collaboration enables:

Enhanced Human Creativity Through Guided AI Support

Higher-Quality Results Through Expert Review

Clear Responsibility For Final Decisions

Improved Productivity With Safer Automation

Balanced Interaction Between Human Insight And AI Speed

This human-centered approach reinforces why controlling the output of generative AI systems is important in modern workflows.
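The idea of keeping a person responsible for final decisions can be sketched as a small review gate: drafts in high-risk domains are held until a named reviewer signs off. Everything here is hypothetical, including the `Draft` class, the status labels, and the choice of which domains count as high risk.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed set of domains that always need a human decision; adjust to policy.
HIGH_RISK_DOMAINS = {"healthcare", "finance", "legal"}


@dataclass
class Draft:
    text: str
    domain: str
    status: str = "pending_review"
    reviewer: Optional[str] = None


def route(draft):
    """Hold high-risk drafts for a person; let low-risk drafts through."""
    if draft.domain in HIGH_RISK_DOMAINS:
        draft.status = "pending_review"
    else:
        draft.status = "auto_approved"
    return draft


def approve(draft, reviewer, edited_text=None):
    """Record the human who signs off, optionally applying their edits."""
    if edited_text is not None:
        draft.text = edited_text
    draft.status = "approved"
    draft.reviewer = reviewer
    return draft


# Hypothetical drafts used only to show the two paths.
memo = route(Draft(text="Draft patient summary.", domain="healthcare"))
post = route(Draft(text="Friendly product update.", domain="marketing"))
print(memo.status, post.status)
```

Storing the reviewer's name alongside the approved text is what gives the workflow a clear owner for each final decision.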

Why Is Controlling The Output Of Generative AI Systems Important For Responsible Innovation And Long-Term Adoption?

Sustainable innovation explains another key reason why controlling the output of generative AI systems is important. When AI operates responsibly, society builds confidence in its potential.

This creates space for broader adoption across industries while minimizing risk, uncertainty, and public resistance to emerging technologies.

Responsible innovation does not oppose progress; it strengthens it.

Long-term AI adoption benefits from:

Building Public Trust Through Safe AI Practices

Encouraging Transparent And Accountable AI Development

Supporting Scalable And Sustainable Innovation

Reducing Ethical And Operational Risks Over Time

Creating Stable Foundations For Future AI Advancements

Best Practices For Controlling The Output Of Generative AI Systems

To fully address the importance of controlling the output of generative AI systems, organizations must implement structured governance strategies.

These best practices help reduce risk, improve output quality, and ensure AI systems operate in alignment with organizational values, regulations, and user expectations.

Key best practices include:

Implement Human-In-The-Loop Review Processes

Define Ethical And Safety Filters For AI Output

Use Domain-Specific Fine-Tuning And Constraints

Log And Audit AI Outputs For Accountability

Provide Clear User Guidelines And Disclaimers

Regularly Test AI Models For Bias And Error

Align AI Use Cases With Legal Requirements

Train Teams On Responsible AI Governance

These practices ensure practical and meaningful control over AI-generated results.
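Two of the practices above, safety filters and audit logging, can be combined in a few lines. The sketch below is one possible shape under stated assumptions: the blocklist terms, the function names, and the record fields are all invented for illustration. A production filter would more likely rely on a trained classifier than on substring matching.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical blocklist; real filters are usually far more sophisticated.
BLOCKED_TERMS = {"build a weapon", "steal credentials"}


def check_output(text):
    """Return the blocked terms found in `text` (empty list means it passed)."""
    lowered = text.lower()
    return sorted(term for term in BLOCKED_TERMS if term in lowered)


def audit_record(prompt, output, violations):
    """Build a JSON-serializable record of one generation for later audit."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Hashes let auditors match records without storing raw text here.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
        "violations": violations,
        "released": not violations,
    }


def release_or_block(prompt, output, log):
    """Filter the output, append an audit record, and return what the user sees."""
    violations = check_output(output)
    log.append(audit_record(prompt, output, violations))
    if violations:
        return "This response was withheld by a safety filter."
    return output


audit_log = []
shown = release_or_block(
    "Summarize our refund policy",
    "Refunds are issued within 14 days.",
    audit_log,
)
print(shown)
print(json.dumps(audit_log[-1], indent=2))
```

Logging every generation, including the ones that pass, is a deliberate choice here: an audit trail that only records blocked outputs cannot show what the filter missed.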


Conclusion: Why Is Controlling The Output Of Generative AI Systems Important?

Controlling the output of generative AI systems is important because it protects users, organizations, and society from risk while enabling reliable, ethical, and trustworthy innovation.

By managing accuracy, fairness, safety, legality, and collaboration, we ensure generative AI strengthens human capability rather than replacing or harming it.

In today’s rapidly evolving digital ecosystem, why controlling the output of generative AI systems is important is no longer just a theoretical question; it is a practical necessity.

Responsible control promotes safety, transparency, and sustainability, allowing generative AI to deliver meaningful value across industries.

FAQ 1: What Does Controlling The Output Of Generative AI Systems Mean?

Answer: Controlling the output of generative AI systems means applying filters, guidelines, review mechanisms, and governance rules to ensure the content produced is accurate, ethical, safe, and compliant. It involves monitoring how AI generates text, images, and insights to prevent misinformation, bias, harmful content, or misuse in real-world applications.

FAQ 2: Why Is It Important To Regulate AI Output In High-Risk Domains?

Answer: Regulating AI output in high-risk domains such as healthcare, finance, security, and legal environments is essential because incorrect or misleading outputs can cause serious harm. Output control reduces the risk of false information, unsafe recommendations, or unintended consequences that may impact individuals, organizations, or public systems.

FAQ 3: How Does Output Control Improve Trust In Generative AI Systems?

Answer: Output control improves trust by ensuring that AI-generated content remains reliable, responsible, and transparent. When organizations review and validate AI outputs, users develop greater confidence in their credibility. This strengthens brand reputation, enhances user experience, and encourages broader acceptance of AI-powered tools and technologies.

FAQ 4: Can Controlling AI Output Help Prevent Bias And Ethical Risks?

Answer: Yes, controlling AI output plays a major role in minimizing bias, discrimination, and ethical risks. AI systems may unintentionally reinforce social stereotypes or reproduce biased data patterns. Output regulation ensures fairness, inclusivity, cultural sensitivity, and alignment with ethical standards across different user groups and applications.

FAQ 5: What Are The Best Practices For Controlling Generative AI Output?

Answer: Some of the most effective best practices include:

Implementing Human-In-The-Loop Review Models

Applying Ethical And Safety Filters To AI Outputs

Auditing And Logging Generated Content For Accountability

Fine-Tuning Models With Domain-Specific Constraints

Testing AI Systems For Bias, Accuracy, And Consistency

These practices ensure that generative AI systems operate safely, responsibly, and in alignment with organizational values.